Weird New Tech Gadgets From Mobile World Congress


Things Got A Little Weird This Week In Barcelona.

Every year the Mobile World Congress, which took place this week in Barcelona, showcases some of the newest and the fastest in mobile technology. But when nearly all of the world’s biggest smartphone manufacturers get together and jockey for media attention, things can get a little bizarre.

Here are a few of the strangest finds:

Samsung’s IOFIT smart shoes (from $199)

Help perfect your golf swing or up your squat game. That’s the promise of the IOFIT smart shoes from Samsung. Like a fitness tracker for feet, the shoes measure movement via embedded accelerometers and also have pressure sensors to gauge balance, weight shift, and center of gravity. People wearing them can analyze their form via a connected smartphone app. But the tech would be even more useful if the app pushed that information back into the shoes. For example, it would be great if the shoe could make your toes vibrate to indicate how to perfect a swing or improve your squats at the gym.


Most Useful Podcast Ever: Finally, Some Totally Wireless Earbuds That Work

And work well to boot.

By Alexander George

To hear more from the editors of Popular Mechanics, download this week's episode of The Most Useful Podcast Ever, and be sure to subscribe and comment on iTunes!

For years we've been hearing that completely wireless Bluetooth earbuds—basically just earplug-style headphones—are just around the corner. At least that's what it feels like. I'm talking about designs on par with your typical, good quality $50 earbuds, just without any wires at all but with little charging carrier cases instead. Think Her.

None of the half-dozen or so models we'd seen in prototype form at CES or on Kickstarter ever seemed to materialize, so we called every manufacturer of Bluetooth earbuds we could find. Of the many, only a few had what seemed to be realistic plans for production and eventual distribution. Fewer had actual test units ready.

It's a bummer, because the proposal is enticing. Potentially a huge upgrade over every other method of privately listening to music. A lot of other Bluetooth headphones are over-ear, big enough for the requisite sensors, or they include some sort of weird hub-like plastic that rests like a collar on your neck. On top of that, they're rare enough that they still present as kind of dorky. But man, once you go without wires, it's bliss. The in-line microphone doesn't catch on your jacket zipper, and, most of all, there's nothing to untangle. No. Tangled. Wires.

That's why the first hints of availability of the Erato Apollo 7 headphones have me so excited. These go on sale in June, and, despite the fact that I've only spent time with a working prototype, I'm confident these are good enough to validate this category of product. I'm going to have a hard time going back to my beloved $25 buds.

The process for pairing is the same as anything Bluetooth — hold down the one button on each earbud for a long time, until a British woman's voice tells you that it's pairing. Pick the Apollo 7s from the Bluetooth section of your phone's Settings, and you're ready to go. It's a little more complicated than plugging headphones into your phone's 3.5mm jack, sure, but once you pair the first time, your phone will recognize them in the future.

When it's time for some music, you just remove them from the charging cradles in their carrying case. Put the right one in your ear, and hold down the button for two seconds, until British lady says, "Power on," then, "Connected." Put left earbud in ear, "Power on," "Connected." Then, after a split second of lag, the music kicks in and you're good for about 4 hours of use before you need a charge again, at which point the case, with a battery of its own, is there for you.

When it kicks in, though, it's glorious. The sound is great, so far as earbuds go, and they fit in my ears just fine; I've got no fear they'll fall out. And though the connection isn't always perfect (it's cut out once or twice for a few seconds here and there), putting my phone in my back pocket didn't interrupt the stream, which is a problem I've had even with $400 Bowers & Wilkins P5 Wireless headphones, or corded Bluetooth earbuds like Beats or Jaybirds.

The question, of course, is whether you're at risk of losing these little guys. And maybe here is a good place to point out that they're $300. I haven't lost mine yet; when I take them out, I put them right into the charging case, which is about the size of a Tic-Tac container. Small enough to always have around and big enough to never lose. Just don't take them out when standing over a sewer grate. I'll admit it's conceivably a problem, but not one I've had. You just have to be careful.

The only thing I'd change, besides the price, is the blue LED that blinks every three seconds when they're engaged. It's the one thing left tying these new-school future 'buds to the dadtastic Bluetooth headsets of the past.

Flexible Phone Lets You See and Move Objects in 3D

A pretty good reason for a bendy phone!

By Joe Svetlik

We've seen 3D phones before, we've seen bendy phones before, but this is the first time the two technologies have come together: the world's first holographic flexible smartphone. The results are pretty dang cool.

The phone has been dubbed HoloFlex. It's a research project from the Human Media Lab at Queen's University in Canada. Its screen shows 3D images and videos but doesn't require the viewer to wear 3D glasses, or stand in the 'sweet spot' either—the images will appear to have just as much depth if you're at an angle to the phone. That's pretty rare in the world of 3D.

So how does it work? Images are rendered into 12-pixel-wide circular blocks (these allow the image to have depth when seen from any angle) that project through a 3D-printed flexible micro lens array made of over 16,000 fisheye lenses. It also has a bend sensor which lets you bend the handset to move objects along the z-axis.

In other words, you can swipe to move things on-screen as normal, and then bend the phone to bring objects out of the screen. Sadly the phone is purely in the research stages at the moment. But maybe the technology will find its way into consumer phones someday.


From: Digital Spy

Hey Siri, Can We Talk?

Apple, Amazon, Google, and others are pushing us to use our voices rather than our fingers to interact with their products. But do we really want our assistants to be more human?

By Rachel Z. Arndt

Not long after Radiohead's 1997 masterpiece OK Computer came out, I sat in the small upstairs office of my house and sang along to the album's least tuneful song, "Fitter Happier," a track in which the lead vocalist is a monotone computer voice that reads aloud ways to become healthier and more productive. By "sang along," I mean I made my computer sing along: I typed out the words and hit play at the exact right moment so the computerized voices (both Apple's Fred) fell in line with each other.

I loved making machines talk. Some years before that, I typed words into a 90s program called Kid Works, which you could use to have a man's voice read aloud short bits of text. "Rachel's dog 10" I typed, a reference to a drawing I just did with a mouse. "Rah-chel-S- dog-ten" the computer read back to me, the deep, scratchy man's voice sounding tinny from the Macintosh IIsi built-in speaker.

When I first learned to make a computer talk, I had to tell it exactly what to say. The only surprises came from the machine's curious mispronunciations. Nowadays I make my gadgets converse with me by rousing them with my voice and waiting to hear what they come up with in response. The difference is so stark I have to remind myself I'm chatting with a machine. When my phone's virtual assistant gets something wrong, I have to correct myself: She didn't get it wrong—it got it wrong. (I actually have my Siri set to use a man's voice, so there's a double layer of wrong happening here.)

This distinction should be easy. Yet when things talk to us and when we talk to them, we have human expectations for them. The more natural our conversations are, the thinking goes, the more we'll talk. And the more we talk, the more our artificial assistants will be able to learn from us, become like us, and make conversation with us more naturally. Jim Glass, head of MIT's Spoken Language Systems Group, puts it this way: "If you make the interaction more like human-human interaction, humans might not have to think as hard about how to express themselves, because the computer would be behaving in ways a human would."

That's the goal, at least, and that's why Apple, Amazon, Google, and others are pushing us to use our voices rather than our fingers to interact with their products. Talking is easier and faster than typing. Voice assistants like Siri have been on the market for years and are getting better all the time. So why do they still feel so weird to use? Why does it feel so uncanny to talk to an inanimate object and to expect it to talk back in your own language?

I've long been resistant to using Siri. Very simply, I'm embarrassed. Talking to an object is embarrassing in a way that, say, talking to a stranger's dog on the street is not. Maybe it's the mundanity of what I'm saying, asking Siri boring questions about what the weather is like today. Or maybe it's that the mundanity touches something deeply personal—the dictation of a text message saying "I'm on my way," or the question to Google about what I should eat tonight. Talking to a voice assistant feels a bit like speaking a foreign language to a native speaker. "For some people," Glass says, "having the machine try to be like a human can be weird." Indeed.

Eventually, I gave in. It happened when I moved to a place where I drove after years of riding the subway. I'd often wonder what song was on the radio, and Siri could tell me. Or I'd think of something I needed to get at the grocery store, and I'd have Siri add it to my list. But it was when I started wearing an Apple Watch that I really gave in. It's just so convenient to raise my wrist to my mouth and tell Siri to remind me at 4 to call the cobbler.

There are now devices out there that let you control your whole smart home without lifting a finger. Tell your house to become 72 degrees, and it will. That's a nice hands-free trick, but that's what it is: a shortcut, not a conversation. Something else is now happening. Tell the speaker to play more music that sounds like the National, and it will. Not only will it do that, but it'll learn what you mean by "play more music that sounds like the National"—that you mean this band but not that band. It'll recognize you as you. How is this even possible?

Now that Siri and its ilk have been around for a few years, it's become easy to forget just how much it takes to make even this level of voice control happen. There are three huge steps working here.

First, the device has to be able to hear you. It needs a decent microphone, one that can pick up your voice amidst a collection of other sounds. Next, it needs to be able to understand you; that is, it needs an algorithm that can identify the sound coming from you as speech and translate that speech into text. Often, this step involves recognizing keywords, like "what" and "weather" and "San Francisco." Good voice assistants can understand unconventional pronunciations; after you've said a word enough times, a voice assistant will understand you always say it that way. It doesn't matter if the pronunciation isn't standard, only that the pronunciation is consistent. Last comes the step that separates a computer assistant from a mere computer voice. It needs to understand what you're asking for, and it must be able to send data to and receive data from the cloud to give you the response you want.
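To make the keyword-recognition step a little more concrete, here is a toy sketch in Python. It assumes the speech has already been turned into text, and it simply looks for trigger words and place names in that text. The keyword table, the place list, and the function names are all made up for illustration; real assistants rely on statistical models and cloud services rather than a lookup table like this.

```python
import re

# Hypothetical keyword table: maps a trigger word to an intent name.
INTENT_KEYWORDS = {
    "weather": "get_weather",
    "remind": "set_reminder",
    "play": "play_music",
}

# Hypothetical list of places this toy assistant knows about.
KNOWN_PLACES = ["san francisco", "iowa city", "barcelona"]


def tokenize(transcript):
    """Lowercase the transcript and split it into words."""
    return re.findall(r"[a-z']+", transcript.lower())


def spot_intent(transcript):
    """Return the intent for the first trigger word found, or None."""
    for word in tokenize(transcript):
        if word in INTENT_KEYWORDS:
            return INTENT_KEYWORDS[word]
    return None


def spot_place(transcript):
    """Return the first known place mentioned in the transcript, or None."""
    text = transcript.lower()
    for place in KNOWN_PLACES:
        if place in text:
            return place
    return None


def interpret(transcript):
    """Combine the spotted intent and place into one request."""
    return {"intent": spot_intent(transcript), "place": spot_place(transcript)}


if __name__ == "__main__":
    print(interpret("What is the weather in San Francisco?"))
```

A request with no trigger word comes back with an intent of `None`, which is roughly the point at which a real assistant falls back to a web search.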

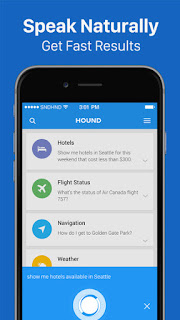

All mainstream voice assistants are decent at these three tasks, but each has its strengths. Siri and Google Now both learn a user's voice and understand what the user wants fairly well. But they can have a hard time picking up a voice amidst the daily cacophony. It's no surprise that Amazon's Echo (it's a speaker, after all) picks up the slack there. But the Echo is limited by the apps it is connected to (it won't work with iCloud, for example) and by its size: you won't take this thing to the bar and ask it about basketball scores. And even if you could, it might not be able to answer, as it seems to be designed for more home-related tasks, like ordering pizzas. Hound, SoundHound's voice-controlled search engine, actually does speech recognition and natural-language processing at the same time, in a process it calls "speech-to-meaning." The app is currently the best at understanding complex requests, like "Find me a coffee shop with Wi-Fi that serves beer within a mile of my house."


Whichever voice assistant you use, you probably had to talk to it for a while before it began understanding your requests on the first try. Some of us have gotten used to the idea of talking to our gadgets. But many of us still haven't gotten used to how we have to talk to our gadgets.

Whether or not we think about it in such cold and rational terms, human language is a means of information retrieval. A conversation can be angry, witty, romantic, or tense, but talking to another human being is fundamentally about exchanging information. It's a collection of inputs and outputs, if you think about it as you would with a machine.

Yet the human voice may be the least intuitive input device for interaction with machines simply because it's the most intuitive input device for interacting with each other. We already know talking so well that it's a primarily unconscious endeavor. I've spent my whole life figuring out how to communicate with other people by talking, and most of my life figuring out how to communicate with machines using my hands. Now I'm supposed to sit back and command a speaker or phone to do my bidding, expecting a machine to deliver a mechanized (and, I hope, accurate) response behind the veneer of a human voice. And when I command that phone, I have to use the right phrases. I'm simultaneously supposed to talk to the device naturally—since I'm using my voice—and unnaturally, since I know its ability to understand me (and the realm of answers it can provide) remains limited. Countless memes and videos are dedicated to Siri easter eggs, the quirky canned responses Apple has programmed it to give when you ask the assistant a question it cannot answer.

Things are getting better, meaning voice assistants are getting a lot more human-sounding. "The convenient thing to do is to mimic the human model so you're bringing along what you already know about communication," says Nuance CTO Vlad Sejnoha. But today, "it's like interacting with five-year-olds." That's partly due to the very means of communication: language, which is wonderful but ambiguous. So it can be hard both for voice assistants to understand what we're saying and for us to understand what they're saying back, or at least why they're saying it. Why, for instance, does Siri default to a Bing search when I ask it "What year was Iowa City founded?"

Part of the problem is a lack of "introspection," Sejnoha says. AIs need to know what they don't know, and they need to be able to explain it. When they can do that, Siri still may default to a web search, but it'll at least tell you why it's doing that, just as a person might tell you why she doesn't understand your request.

The real question of the moment is whether Siri should be more human. On a practical level, sure. It'd be helpful if Apple's voice assistant were better at understanding what you were asking. For Silicon Valley prophets who foresee seamless interaction between human and gadget, that's probably a no-brainer. Yet we might grow too uncomfortable as our devices fall deeper and deeper into the uncanny valley.

For another thing, teaching a machine to learn and "think" as a human does may hold it back. The magic of voice assistants is that they talk to us as if they were human, yet they have instant access to a vast reservoir of knowledge beyond the hope of a mere mortal. I can't tell you when the next train is coming, nor can I seem to remember how many tablespoons are in a quarter cup, but Siri can.

The ideal may be a voice assistant that understands us as a human would while responding as both a machine and human would. A machine we can talk to in a natural voice, without having to rephrase our thoughts in a way a computer would understand, but that has access to vast quantities of information and runs many tasks simultaneously. Something that is human, but not too human.

"In the future, we will have complex conversations with the things around us," says Keyvan Mohajer, founder and CEO of SoundHound. "The more these things become humanlike, the more people will adopt them—and will feel comfortable about adopting them." Maybe so, but don't expect things to be perfect along the way. "If they sound like humans, it increases the expectations for what they can do. People might start asking subjective questions, like, 'My girlfriend broke up with me, and I'm sad—what should I do?'" Mohajer says. "But in the long term, machines will be able to handle all those questions."

Until then, we'll have to make do with over-enunciating and dead ends. We'll have to make do with using the most human of inputs—the voice—and getting something not quite human in response.

Super Speedy New Voice Assistant "Hound" Leaves Siri In the Dust

The new "Hound" app can handle commands that are so long and complicated that it is absurd.

By Eric Limer

Ever felt let down by Apple's Siri, Google's Google Now, or Microsoft's Cortana? Well, there's a new voice assistant you can try, and it is intense. Not only is it lightning fast, it can support all kinds of search commands, even ones with countless ifs, ands, or buts.

Watch the video above and you'll get the idea quick, but Hound is a search app designed to help you find the very specific. Not just coffee shops. Not just coffee shops near you. Not just coffee shops near you with free Wi-Fi. Not just coffee shops near you with free Wi-Fi that are open before 8 AM on Tuesdays. Not just coffee shops near you with free Wi-Fi that are open before 8 AM on Tuesdays and aren't Starbucks. But coffee shops near you with free Wi-Fi that are open before 8 AM on Tuesdays and aren't Starbucks listed by rating.

Hound's superpower, aside from sheer speed of response, is the ability to recognize all these nested requirements and parse them correctly, no matter what order you say them in or how muddled the command gets. Just talk to it like a normal human and boom. Although you'll probably have to think for a second to get all your criteria in one sentence that comes out smooth on the first try.
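For a rough feel of what order-independent parsing means, here is a toy Python sketch. It is not how Hound works (SoundHound describes its own approach as "speech-to-meaning"); it only shows the general idea that each requirement can be picked out of the command separately and then applied as a filter, so the order you say them in stops mattering. The `Shop` class, the phrase table, and the sample data are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Shop:
    name: str
    has_wifi: bool
    opens_at: int   # opening hour on a 24-hour clock
    rating: float


# Hypothetical phrase table: each phrase maps to a test a shop must pass.
CRITERIA = {
    "free wi-fi": lambda s: s.has_wifi,
    "open before 8": lambda s: s.opens_at < 8,
    "aren't starbucks": lambda s: s.name != "Starbucks",
}


def parse_criteria(command):
    """Collect every known requirement mentioned anywhere in the command."""
    text = command.lower()
    return [check for phrase, check in CRITERIA.items() if phrase in text]


def search(command, shops):
    """Keep shops that pass every requirement; sort by rating if asked."""
    checks = parse_criteria(command)
    matches = [s for s in shops if all(check(s) for check in checks)]
    if "by rating" in command.lower():
        matches.sort(key=lambda s: s.rating, reverse=True)
    return [s.name for s in matches]


if __name__ == "__main__":
    shops = [
        Shop("Starbucks", True, 6, 3.9),
        Shop("Bean There", True, 7, 4.6),
        Shop("Late Riser", True, 10, 4.8),
    ]
    print(search("coffee shops with free Wi-Fi that are open before 8 AM "
                 "and aren't Starbucks listed by rating", shops))
```

Shuffling the clauses in the command gives the same result, because each phrase is matched on its own. The obvious weakness is that it only understands the exact phrases in its table, which is the "talk to it in a way that it expects" problem in miniature.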

But while Hound is great at very specific searches for restaurants, hotels, or weather, it does lose out to the competition in some areas. Google Now can answer "Who won the Republican primary in Alaska last night?" Hound can't. When I ask "Where can I get a good Vietnamese sandwich around here?" Google Now returns a Yelp list of local shops whereas Hound does a search for restaurants in Sandwich, Illinois. So yes, Hound can take some impressive commands, but you still have to talk to it in a way that it expects.

Still, if you want to try it out yourself—and you should—it's now available on iOS and Android for free. You've got nothing to lose! And the next time you need to find a coffee shop near you with free Wi-Fi that is open before 8 AM on a Tuesday and isn't Starbucks, you'll thank yourself.

Source: SoundHound
