
Google Webmaster Tools (GWT) is the best free SEO tool you can get for your blog. For bloggers at any level, GWT lets you check the overall health of your site and helps you implement various optimization techniques for your blog. We have covered a few of these in the past, but in case you are just getting started, here are some articles to bring you up to speed:

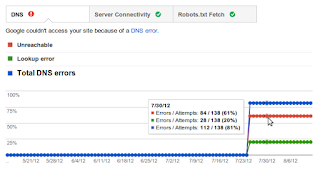

A few days back, Google added a beautiful chart to Webmaster Tools showing the site crawl error rate and what causes those errors. This is a very useful feature, and I am going to quickly show you what you can learn from these errors, along with possible ways to fix the site crawl error warnings using Google Webmaster Tools.

Getting started with site crawl errors in GWT:

Log in to your GWT account and, under your site dashboard, click on Health > Crawl errors. This page will display a graph showing the errors and what caused them. Since my blog is not facing such crawling issues, I’m using the image from the official blog post. So the detection of various crawl errors will look something like this:

At the time of writing this post, Google Webmaster Tools shows three types of site errors: DNS errors, server connectivity errors, and robots.txt fetch errors.

If you see the errors in your chart growing like those shown in the image above, remember that it is your responsibility to fix them so that the bots can crawl your site effectively.

Let’s have a look at the various types of possible errors:

Types of Google site errors

On the page showing you the detected errors, you will see a list of links that are affected. Once you have solved the issues, you can select individual links and mark them as fixed.

DNS errors:

These are like the normal DNS issues we face on our system when our Internet connection is unable to open an address, and displays a notice of a DNS error in the browser window. This can happen for any one of many reasons, including when you change your hosting service or make name server changes.

Since DNS changes take time to propagate, DNS errors often show up in the meantime. Bots in some countries may also be unable to reach your site because of a name server failure, and you will get a similar error as a result. Remember that you can check the ping status of your site from different countries using a service like Just-Ping.

A few days ago I moved ShoutMeLoud from Hostgator to Knownhost VPS, and I could see my DNS errors increasing. Ultimately I received this email from GWT:

“Over the last 24 hours, Googlebot encountered 66 errors while attempting to retrieve DNS information for your site. The overall error rate for DNS queries for your site is 1.5%. You can see more details about these errors in Webmaster Tools.”

Since I knew the reason for these errors, I didn’t worry about them, and I currently have zero DNS errors.

DNS errors reported in Webmaster Tools can also be due to high latency, which is usually related to an issue with your hosting service. If you are constantly getting a high rate of DNS errors, you should contact your host’s technical support and ask them to look into it. If they can’t fix the issue, simply move to a better hosting service instead of blaming the host or Google for such errors.
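If you want a rough idea of whether your domain resolves reliably before you contact your host, a small script can help. The sketch below is only an illustration and is not something GWT provides: the function names `probe_dns` and `error_rate` are my own, and it only tests resolution from your own machine, not from Googlebot's location. Your operating system may also cache DNS answers, so repeated lookups can look faster than a fresh one.

```python
import socket
import time


def probe_dns(hostname, attempts=5, resolver=socket.getaddrinfo):
    """Resolve `hostname` several times.

    Returns (failures, latencies), where latencies holds the time in
    seconds of each successful lookup. `resolver` is a hypothetical
    hook so the lookup can be swapped out (for example in tests).
    """
    failures = 0
    latencies = []
    for _ in range(attempts):
        start = time.monotonic()
        try:
            resolver(hostname, 80)
        except socket.gaierror:
            # The name could not be resolved on this attempt.
            failures += 1
        else:
            latencies.append(time.monotonic() - start)
    return failures, latencies


def error_rate(failures, attempts):
    """Failure rate as a percentage, similar in spirit to the rate GWT reports."""
    return 100.0 * failures / attempts if attempts else 0.0
```

For example, calling `probe_dns("example.com")` returns the number of failed lookups and a list of timings. A non-zero failure count or consistently slow lookups is a hint, not proof, that the name servers deserve a closer look.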

Server connectivity errors:

This issue is generally related to your server configuration: Google bots can’t crawl your site, or your site times out before the page loads. This usually happens when a page takes too long to load, or when your server’s resources are exhausted. One easy way to fix this issue is to control the crawl rate of bots. GWT doesn’t offer you a pretty graph like Bing Webmaster Tools to control the crawl timing, so you can use the Webmaster Parameter Tool and robots.txt to control the crawling.

Make sure your server is not underpowered. If it is, move to a hosting service with more resources. If you are using a self-configured VPS or dedicated hosting, make sure your firewall and security settings are not blocking access to search engine bots. You can read more about this on the official page here. If you are not using a caching mechanism of some sort, I would recommend using any of these WordPress cache plugins, which will help you to deal with server connectivity errors.
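To see for yourself whether a page answers in reasonable time, you can time a request with a timeout. This is a minimal sketch under my own assumptions: the names `fetch_status` and `check_page`, the 10 second timeout, and the `crawl-check-sketch` user agent are all made up for illustration, and a request from your machine will not behave exactly like one from Googlebot.

```python
import time
import urllib.error
import urllib.request


def fetch_status(url, timeout):
    """Fetch `url` and return its HTTP status code.

    Network failures (refused connection, timeout) raise an OSError subclass.
    """
    request = urllib.request.Request(
        url, headers={"User-Agent": "crawl-check-sketch"}  # hypothetical UA string
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx answers still mean the server was reachable.
        return err.code


def check_page(url, timeout=10.0, fetch=fetch_status):
    """Time one request and report whether the page loaded successfully."""
    start = time.monotonic()
    try:
        status = fetch(url, timeout)
    except OSError as err:
        # URLError and socket timeouts are both OSError subclasses.
        return {"url": url, "ok": False, "status": None,
                "seconds": time.monotonic() - start, "error": str(err)}
    return {"url": url, "ok": 200 <= status < 400, "status": status,
            "seconds": time.monotonic() - start, "error": None}
```

Running `check_page` against a few of the URLs listed in the crawl errors report gives you the status code and load time for each. Pages that time out or return 5xx codes here are good candidates for caching or a server upgrade.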

robots.txt fetch errors:

This is a common error that occurs because of a misconfigured robots.txt file. Bear in mind that robots.txt is used to stop bots from crawling certain areas of your site which you don’t want them to access, usually your wp-admin folder and its parameters, if you are using it. I recommend not blocking the crawling of your tag and category pages using robots.txt, as crawling and indexing are two different things. We have already written a lot about this in the past, and you can refer to the following articles for further information on this concept.
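Before uploading a robots.txt file, it can help to check which URLs it actually blocks. The sketch below uses Python's standard `urllib.robotparser` module on a sample file that blocks only wp-admin. The sample rules, the `is_crawlable` helper, and the example.com URLs are my own illustration, and Python's parser does not follow exactly the same matching rules as Googlebot, so treat the result as a sanity check only.

```python
from urllib.robotparser import RobotFileParser

# Sample rules (an assumption for illustration): block wp-admin,
# leave tag and category pages crawlable.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
"""


def is_crawlable(robots_txt, user_agent, url):
    """Return True if `user_agent` may crawl `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# wp-admin is blocked, while a tag page stays open to crawlers.
admin_allowed = is_crawlable(
    SAMPLE_ROBOTS_TXT, "Googlebot", "https://example.com/wp-admin/options.php"
)
tag_allowed = is_crawlable(
    SAMPLE_ROBOTS_TXT, "Googlebot", "https://example.com/tag/seo/"
)
```

Here `admin_allowed` comes out as `False` and `tag_allowed` as `True`, which matches the recommendation above: keep bots out of the admin area, but let them crawl tags and categories.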

Always remember that some of the errors you will be seeing are temporary. For example, server connectivity errors can happen because of server load, and thus may not be permanent. But if you see errors consistently, or if you’re getting email from GWT regarding crawling issues, you should start working on repairing the issues you are seeing.
